by Rain Chu | Aug 7, 2025 | Uncategorized

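A gpt-oss tutorial: run OpenAI's open gpt-oss models for free on your own hardware, including gpt-oss-20B on a 16 GB laptop. On August 5, 2025, OpenAI finally released gpt-oss in two sizes, gpt-oss-20B and gpt-oss-120B, and it is great news. Both models can run offline on a machine with sufficient resources, with no access to OpenAI's servers required, which keeps your data private and removes usage limits.

GPT-OSS Model Overview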

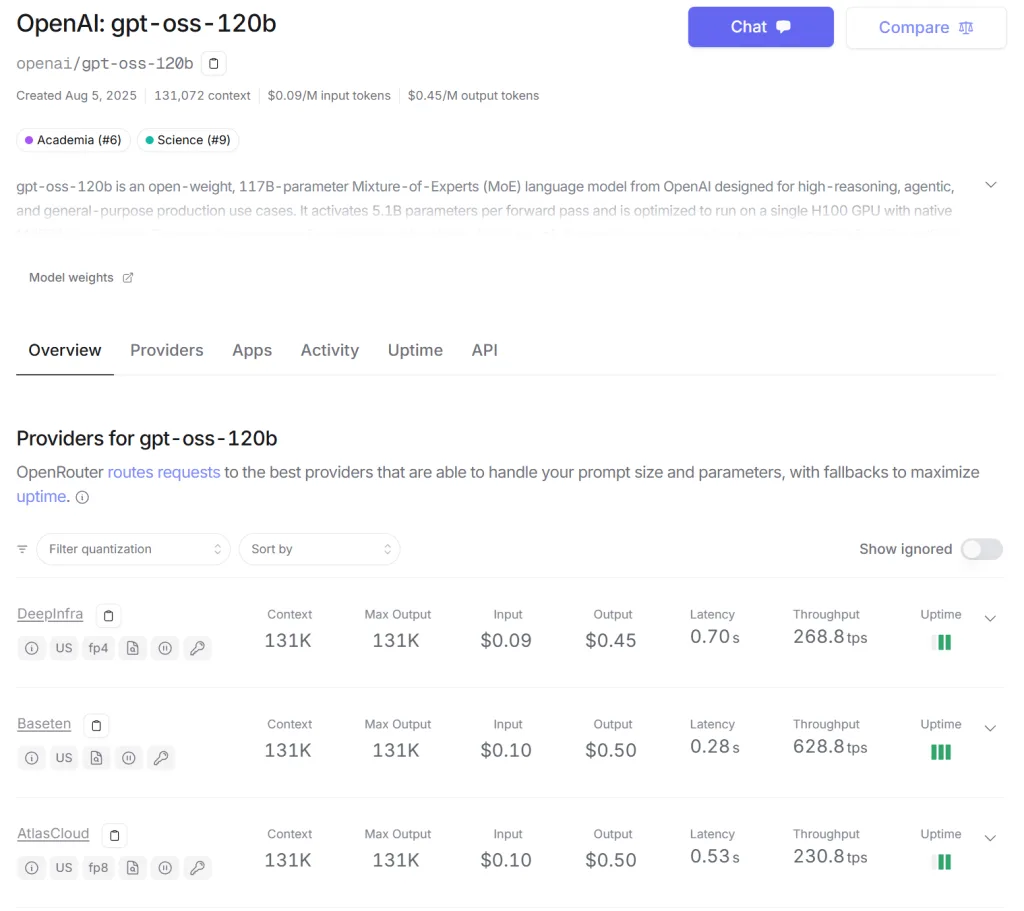

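- gpt-oss-120B

A powerful model with 117 billion parameters. It comes close to OpenAI's o4-mini on the main reasoning benchmarks and supports chain-of-thought planning, which makes it suitable for scenarios that need advanced reasoning.

- gpt-oss-20B

About 21 billion parameters, with performance comparable to o3-mini, yet it runs on devices with only 16 GB of memory. It is the best lightweight option.

Both use a Mixture-of-Experts (MoE) architecture that activates only a subset of the parameters for each token, which reduces the compute needed per token.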
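To make the "only a subset of parameters per token" idea concrete, here is a minimal sketch of top-k expert routing. It is a toy illustration, not the gpt-oss implementation: the eight scalar "experts", the router logits, and `top_k=2` are all made-up values chosen for readability.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(router_logits, top_k):
    """Pick the top_k experts for one token and renormalise their weights."""
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:top_k]
    weights = softmax([router_logits[i] for i in chosen])
    return list(zip(chosen, weights))


def moe_layer(token, experts, router_logits, top_k=2):
    """Weighted sum of the outputs of only the selected experts."""
    output = 0.0
    for index, weight in route_token(router_logits, top_k):
        output += weight * experts[index](token)
    return output


# Eight toy "experts": each one is just a scalar function (hypothetical stand-ins).
experts = [lambda x, scale=i + 1: scale * x for i in range(8)]
# Hypothetical router scores for a single token, one score per expert.
router_logits = [0.1, 2.0, -1.0, 0.3, 1.5, 0.0, -0.5, 0.2]

selected = route_token(router_logits, top_k=2)
result = moe_layer(3.0, experts, router_logits, top_k=2)
```

Only two of the eight experts are evaluated for this token; the other six are never called, which is where the compute saving comes from. All experts still have to be held in memory, so the saving is in computation rather than in model size.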

The models are licensed under Apache 2.0, which permits commercial use, modification, and distribution.

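Why is it worth recommending?

- Truly free, with no usage limits: no subscription, no billing, and no API call quotas.

- Offline operation keeps data safer: inference runs without a network connection and all computation stays on the local machine.

- Balanced performance and practicality: gpt-oss-20B suits laptops and home workstations, while gpt-oss-120B is meant for high-performance GPU hosts.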

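How do you get started with GPT-OSS locally?

The quick-start steps below use Ollama as an example:

- Install Ollama (available for Windows / macOS / Linux).

- Download the model with: ollama pull gpt-oss:20b

- Start a chat session with the model: ollama run gpt-oss:20b

- To run fully offline, you can also enable "airplane mode" in Ollama's settings.

ollama pull gpt-oss:20b # suitable for 16 GB devices

ollama pull gpt-oss:120b # for machines with ≥ 60 GB of GPU memory

Users with lower-end hardware can also run a quantized GGUF build of the model with llama.cpp. At least 14 GB of memory is recommended for smooth responses.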
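Once a model has been pulled, Ollama also serves a local HTTP API, so the model can be called from a script rather than from the chat prompt. The sketch below assumes Ollama is listening on its default address (`http://localhost:11434`) and that `gpt-oss:20b` has already been downloaded; the helper names `build_chat_request` and `ask` are made up for this example.

```python
import json
import urllib.request

# Default address of a locally running Ollama server (an assumption; adjust if changed).
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


def build_chat_request(prompt, model="gpt-oss:20b"):
    """Build a non-streaming chat request for the local Ollama server."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for one JSON object instead of a token stream
    }
    return urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, model="gpt-oss:20b"):
    """Send the prompt to the local server and return the reply text."""
    with urllib.request.urlopen(build_chat_request(prompt, model)) as response:
        body = json.load(response)
    return body["message"]["content"]
```

With the server running, `print(ask("Explain MoE in one sentence."))` should print the model's answer. Nothing leaves the machine, since the request only goes to localhost.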

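Summary

| Model | Suitable hardware | Characteristics |
|---|---|---|
| gpt-oss-20B | Laptops / Mac developers | About 21 billion parameters, performance close to o3-mini |
| gpt-oss-120B | High-end workstations / GPU hosts | About 117 billion parameters, reasoning close to o4-mini |

Both are open models that run offline, are free to use, and have no usage limits, which makes them a good fit for self-hosted deployments and projects with strict privacy requirements. The models are also available through multiple platforms such as Hugging Face, Azure, and AWS.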

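Bonus notes

- It also runs on Macs such as the Mac mini. For gpt-oss-120B, a Mac with 128 GB or more of unified memory is recommended, where it can reach about 40 tokens per second.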

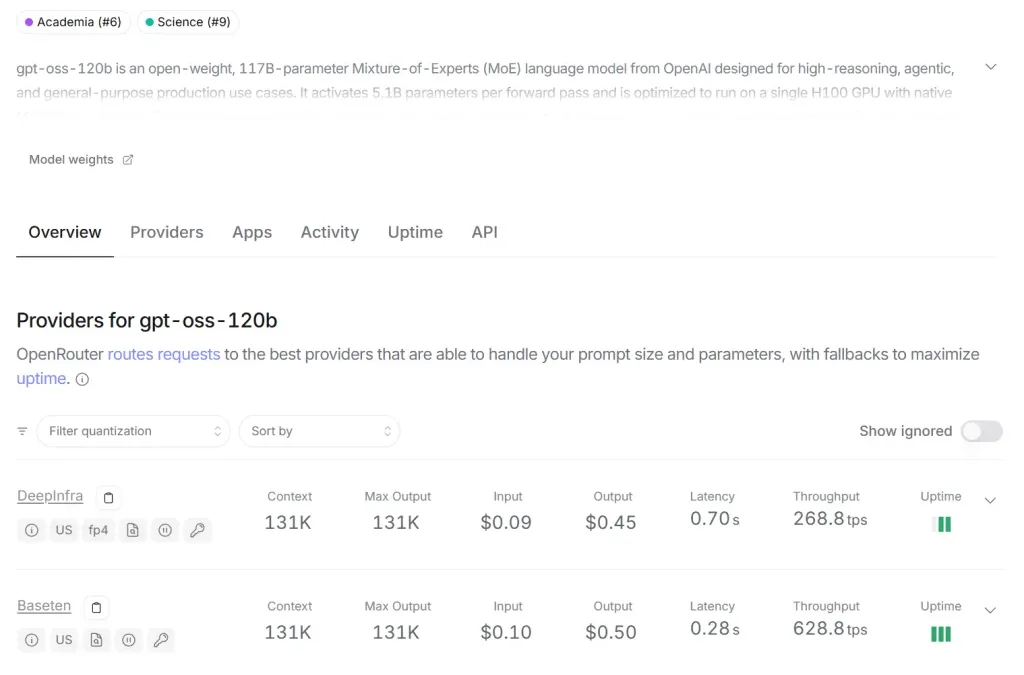

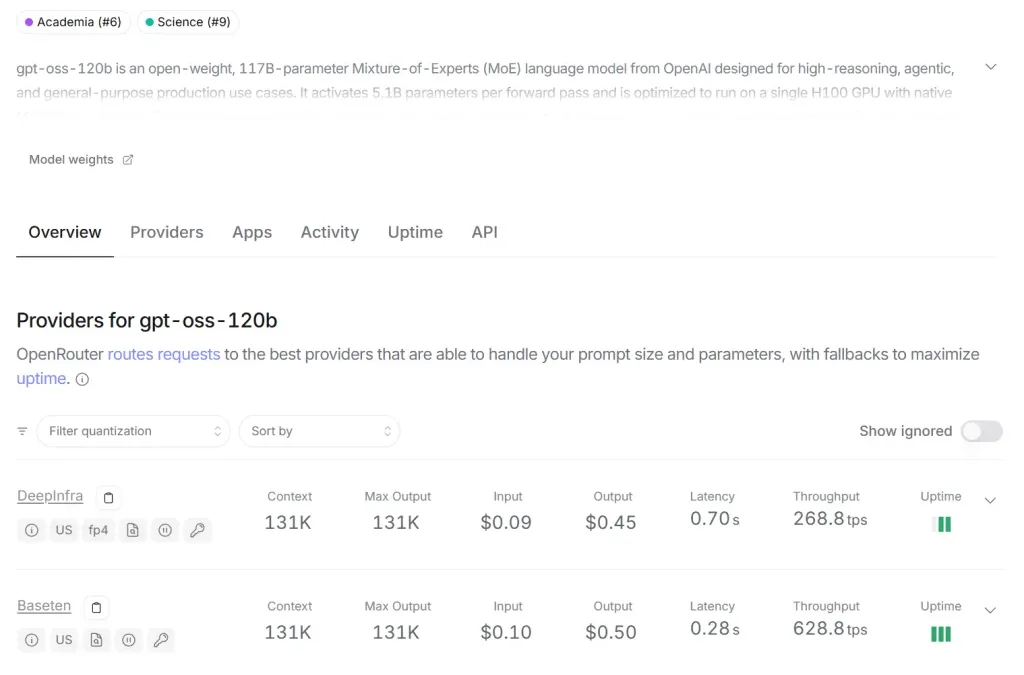

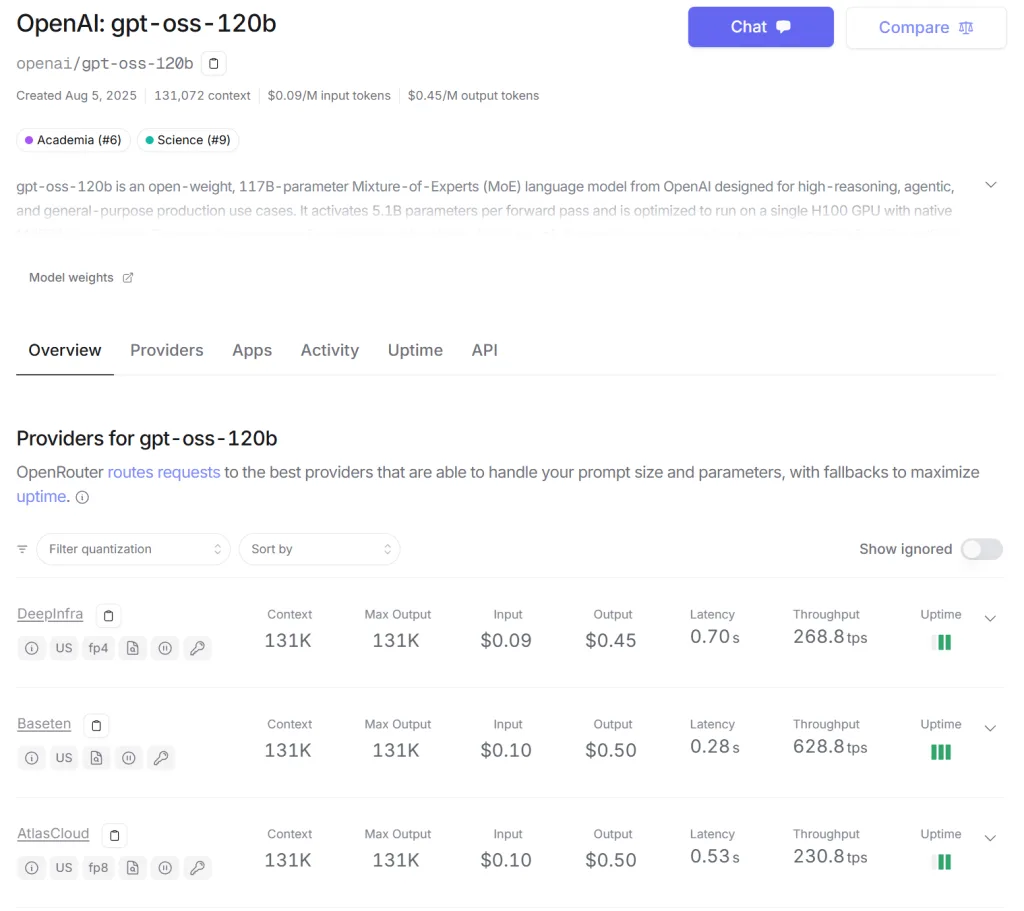

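- If you do not want to buy hardware, you can try it first through OpenRouter or Groq.

- Built-in tool support: browser use, Python, and MCP.

- The reasoning effort can be adjusted.

- It is a Mixture-of-Experts (MoE) reasoning model.

- Supports enterprise-grade serving with vLLM and SGLang.

- Can be used for agents and fine-tuning.

- Native MXFP4 support, so Ollama and similar tools can run it without conversion.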
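The memory figures quoted in this post can be sanity-checked with a little arithmetic. The sketch below rests on a simplifying assumption: every weight is stored in MXFP4, taken here to mean 4-bit values that share one 8-bit scale per block of 32, or 4.25 bits per weight. In practice only part of a model is usually quantized this way, and the KV cache and activations need memory too, so real usage will be higher. The function names are invented for this example.

```python
def mxfp4_bits_per_weight(block_size=32, element_bits=4, scale_bits=8):
    """Average storage per weight: 4-bit value plus its share of the block scale."""
    return element_bits + scale_bits / block_size


def estimate_weight_gb(total_params, bits_per_weight):
    """Rough size of the weights alone, in gigabytes (10^9 bytes)."""
    return total_params * bits_per_weight / 8 / 1e9


bits = mxfp4_bits_per_weight()               # 4.25 bits per weight
size_20b = estimate_weight_gb(21e9, bits)    # about 11.2 GB for ~21 billion parameters
size_120b = estimate_weight_gb(117e9, bits)  # about 62.2 GB for ~117 billion parameters
```

These rough lower bounds line up with the guidance above: the 20B model fits in 16 GB with some headroom, and the 120B model needs a machine with roughly 60 GB or more available to the GPU.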

References

https://github.com/openai/gpt-oss

by Rain Chu | Apr 26, 2025 | 3D, AI

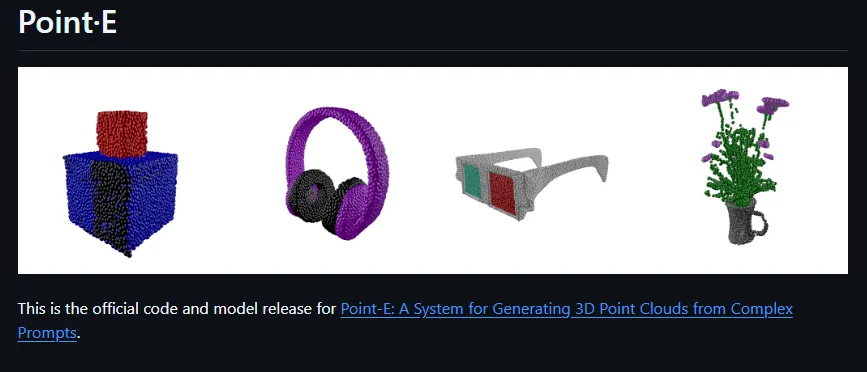

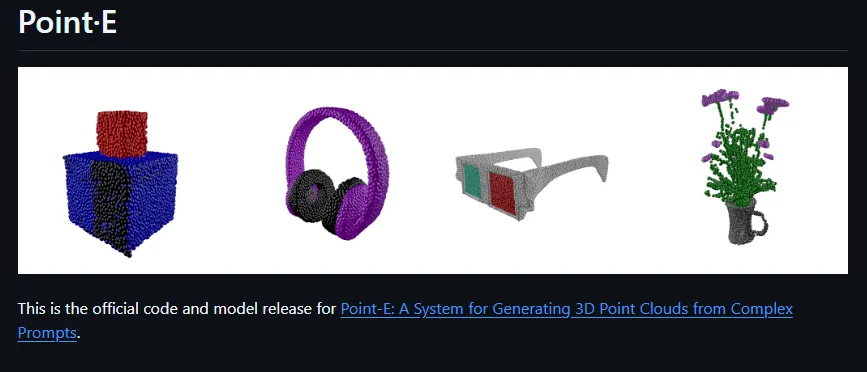

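OpenAI has released two open-source 3D modeling tools, Point-E and Shap-E, which generate 3D models from text or images. This post covers the core features, technical architecture, and usage of both, then compares their strengths and weaknesses to help you choose the right tool.

🔍 Point-E: an AI tool for quickly generating 3D point clouds

📌 Core features

- Input: text descriptions or 2D images.

- Output: a colored point cloud, which can be converted to a mesh.

- Speed: about 1–2 minutes on a single GPU.

- Architecture: a two-stage diffusion pipeline that first generates a synthetic view and then generates a point cloud from it.

- Use cases: rapid prototyping, education, game development, and more.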

🧪 How to use

- Install:

Generate a point cloud:

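🧠 Shap-E: an AI tool for generating high-quality implicit 3D models

📌 Core features

- Input: text descriptions or 2D images.

- Output: implicit functions that can be rendered as textured meshes or as neural radiance fields (NeRF).

- Speed: generates a model in seconds on a single GPU.

- Architecture: an encoder is first trained to map 3D assets to the parameters of an implicit function, then a conditional diffusion model is trained to generate 3D models.

- Use cases: high-quality 3D asset creation, AR/VR applications, 3D printing, and more.

🧪 How to use

- Install:

Generate a 3D model:

- Use the sample_text_to_3d.ipynb or sample_image_to_3d.ipynb example notebooks.

- The generated model can be exported to common 3D formats for further editing or printing.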

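⚖️ Point-E vs. Shap-E

| Feature | Point-E | Shap-E |
|---|---|---|
| Input | Text, images | Text, images |
| Output | Colored point cloud, convertible to a mesh | Implicit function, renderable as a mesh or NeRF |
| Speed | About 1–2 minutes | Within seconds |
| Architecture | Two-stage diffusion model | Encoder + conditional diffusion model |
| Output quality | Medium, suited to rapid prototyping | High, suited to detailed 3D asset creation |
| Use cases | Rapid prototyping, education, game development | High-quality 3D assets, AR/VR, 3D printing |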

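🧩 Which one to choose

- Point-E: a good fit when you need a 3D model quickly, for example in education, early-stage design, or game development.

- Shap-E: a good fit when model quality matters more, for example in AR/VR applications, 3D printing, or animation.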


by Rain Chu | Feb 23, 2025 | AI, Programming

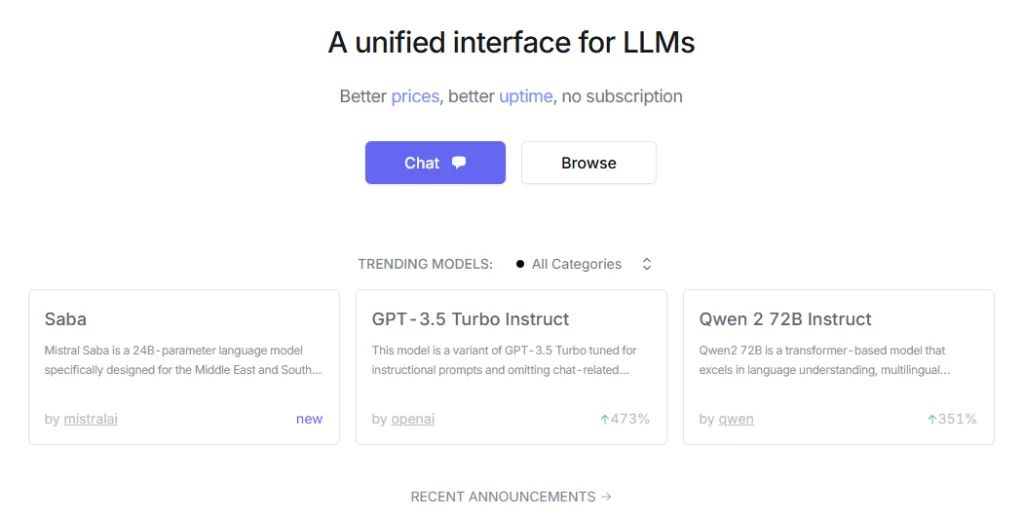

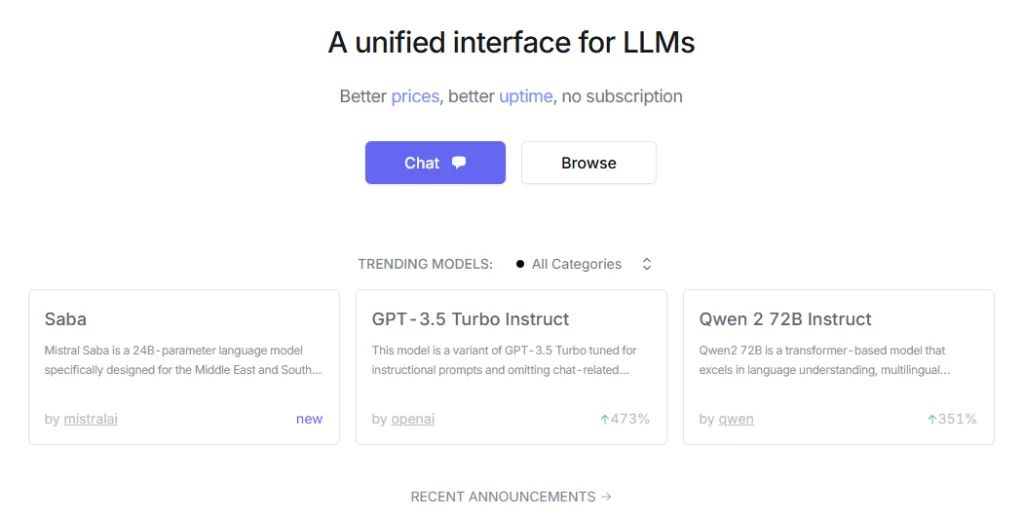

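OpenRouter is a unified API platform for large language models (LLMs) that lets you access many different models through a single interface.

Key features:

- Multi-model support: OpenRouter brings together many pretrained models, such as GPT-4, Gemini, and Claude, so you can pick the one that fits your needs.

- Easy integration: a unified API makes it straightforward to connect to existing systems, with no need to deploy or maintain models yourself.

- Cost efficiency: because models are called through an API, you do not have to buy expensive GPU servers, which lowers hardware costs.

How to use it:

- Sign up: a Google account is enough to register with OpenRouter quickly.

- Choose a model: browse the platform and pick a suitable model. Some models are free to use.

- Call the API: use the unified API to integrate the chosen model into your application.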
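The "call the API" step can be sketched with nothing but the Python standard library. This example assumes OpenRouter's OpenAI-compatible chat completions endpoint and an API key stored in the `OPENROUTER_API_KEY` environment variable. The helper names are made up, and the model slug in the usage note is only an example, so check the OpenRouter model list for current names.

```python
import json
import os
import urllib.request

OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_openrouter_request(prompt, model, api_key):
    """Build a chat completion request; only the model slug changes between models."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask_openrouter(prompt, model, api_key=None):
    """Send the request and return the text of the first choice."""
    api_key = api_key or os.environ["OPENROUTER_API_KEY"]
    request = build_openrouter_request(prompt, model, api_key)
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

A call such as `ask_openrouter("Hello", "deepseek/deepseek-r1")` would then reach one model, and switching to another is just a matter of passing a different slug, which is the practical benefit of a single interface.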

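Cline integration

Integrating OpenRouter with Cline gives developers a powerful AI coding experience. Cline is an AI coding assistant built into VSCode that supports LLMs from several providers, such as OpenAI, Anthropic, and Mistral. Through OpenRouter, Cline can call all of these models in a uniform way, which simplifies switching between and managing different models. You only need to select OpenRouter as the API provider in Cline's settings and enter the corresponding API key to start developing with multiple models. This integration improves development efficiency and lowers the technical barrier to using multiple models.

DeepSeek R1

OpenRouter now also supports the DeepSeek R1 model, a high-performance open-source reasoning model with strong capabilities in mathematics, programming, and natural-language reasoning. Through OpenRouter, developers can call DeepSeek R1 from within Cline. This widens the choice of tools and lets developers pick the model that best fits each project.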

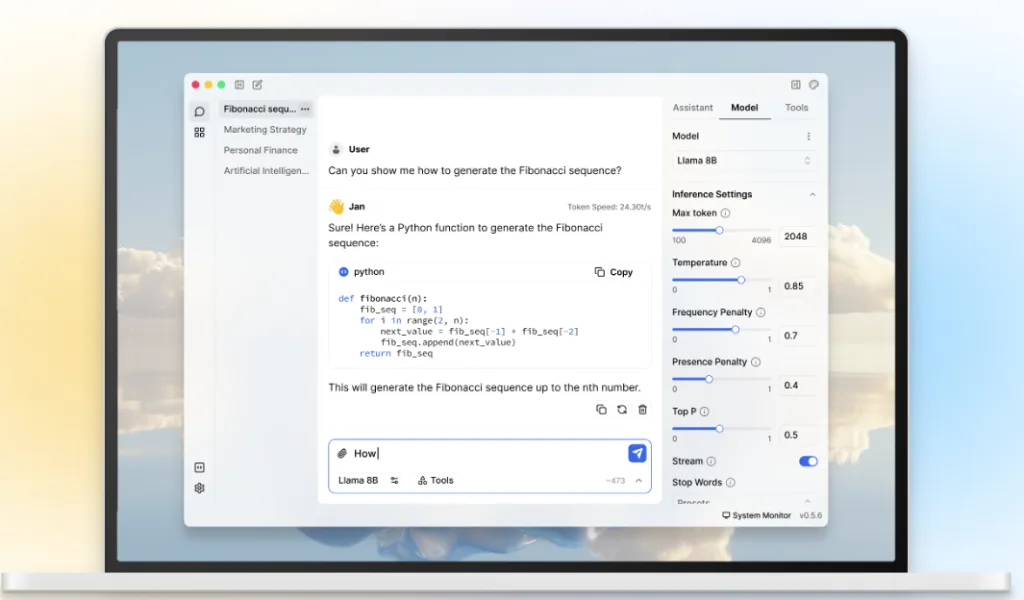

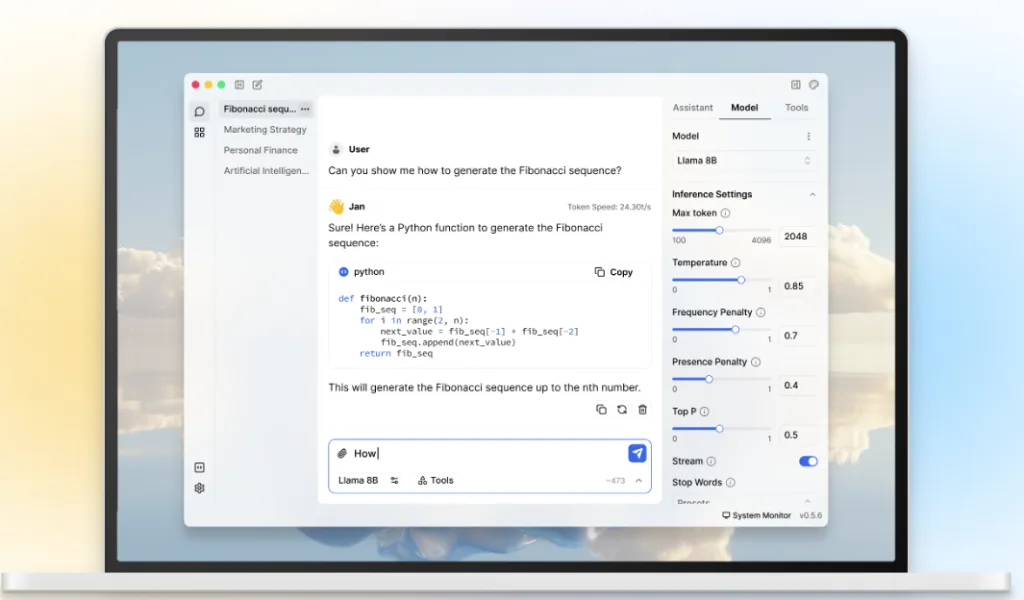

by rainchu | Dec 24, 2024 | AI, Chat

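Jan AI is a fully open-source, cross-platform (Windows, Linux, Mac) AI chat assistant. It works much like ChatGPT, but it can run completely offline on your own computer.

Key features:

- Offline operation: Jan supports a variety of AI models, such as Llama3, Gemma, and Mistral. You can download and run them locally, which keeps your data private.

- Model hub: a wide selection of models that you can download and run according to your needs.

- Cloud AI connections: when needed, Jan can also connect to more powerful cloud models from providers such as OpenAI, Groq, and Cohere.

- Local API server: with one click you can set up and run an OpenAI-compatible API server backed by local models.

- Chat with files: an experimental feature that lets you interact with local documents to work more efficiently.

Open source and customization:

Jan is fully open source. You can customize it to your needs and extend it with third-party Extensions, such as cloud AI connectors, tools, and data connectors.

Privacy and data ownership:

Jan emphasizes the privacy and ownership of user data. All data is stored locally in common formats, so you stay in full control of it.

Downloads and community:

Jan has earned more than 24,000 stars on GitHub and continues to be updated and improved.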


by rainchu | Nov 22, 2024 | AI, Programming

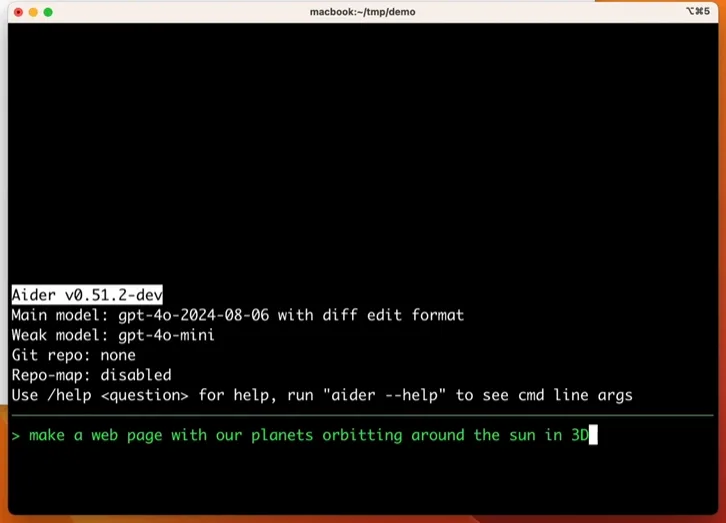

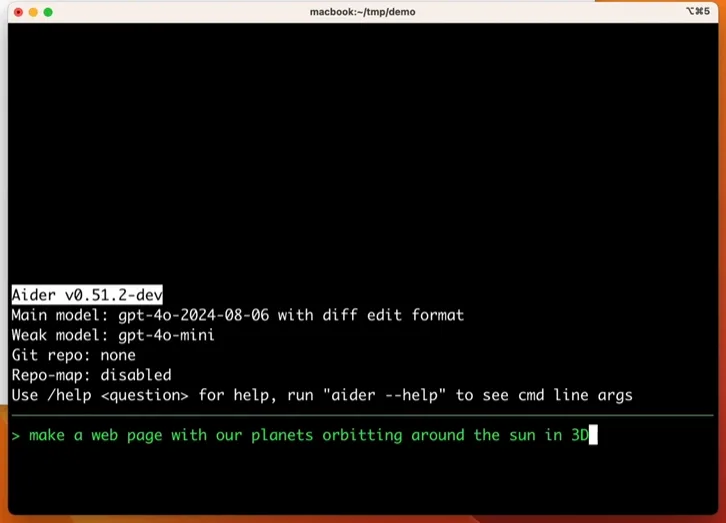

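Aider is a groundbreaking AI pair-programming assistant. Whether you work in the terminal or in a browser, you get the same interactive coding experience.

Flexible ways to run

Aider supports two ways of running:

- Local mode: combined with Ollama, you can run a variety of large language models (LLMs) on your own machine.

- Browser mode: start Aider with the --browser option to chat and edit code in a browser UI instead of the terminal.

Core features and highlights

Seamless Git integration

Aider integrates deeply with your local Git repository, which keeps code management simple and efficient:

- Code editing: use prompts to request code changes, bug fixes, or improvements, and the changes are applied directly to your source files.

- Automatic commits: every change is committed automatically with a descriptive Git commit message, which makes it easy to track and review.

Intuitive commands

You interact with Aider through a set of in-chat commands that cover a range of tasks: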

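| Command | Description |
|---|---|
| /add | Add files to the chat so the LLM can edit them or review them in detail. |
| /ask | Ask questions about the code base without editing any files. |
| /chat-mode | Switch to a new chat mode. |
| /clear | Clear the chat history. |
| /clipboard | Add an image or text from the clipboard to the chat (optionally with a name for the image). |
| /code | Ask for changes to your code. |
| /commit | Commit edits made outside the chat to the repository (commit message optional). |
| /diff | Display the diff of changes since the last message. |
| /drop | Remove files from the chat session to free up context space. |
| /exit | Exit the application. |
| /git | Run a Git command. |
| /help | Ask questions about Aider. |
| /lint | Lint and fix the provided files, or the files in the chat if none are provided. |
| /ls | List all known files and indicate which are included in the chat session. |
| /map | Print the current repository map. |
| /map-refresh | Force a refresh of the repository map. |
| /model | Switch to a new LLM. |
| /models | Search the list of available models. |
| /quit | Exit the application. |
| /read-only | Add files to the chat for reference only; they cannot be edited. |
| /reset | Drop all files and clear the chat history. |
| /run | Run a shell command and optionally add the output to the chat (alias: !). |
| /test | Run a shell command and add the output to the chat if the exit code is non-zero. |
| /tokens | Report the number of tokens used by the current chat context. |
| /undo | Undo the last Git commit if it was made by Aider. |
| /voice | Record and transcribe voice input. |
| /web | Scrape a web page, convert it to Markdown, and add it to the chat. |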

The full command list covers everything from file management to model switching.
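The /test rule described in the table (only failing output is fed back to the chat) is easy to picture with a small sketch. This is an illustration of the described behaviour, not Aider's actual code, and the function name `run_test_command` is made up.

```python
import subprocess
import sys


def run_test_command(command):
    """Run a command; return its combined output only if it failed, else None."""
    completed = subprocess.run(
        command,
        stdout=subprocess.PIPE,
        stderr=subprocess.STDOUT,  # merge stderr into stdout, as a terminal would show it
        text=True,
    )
    if completed.returncode != 0:
        return completed.stdout  # a failure: this text would go back into the chat
    return None  # success: nothing to report


# Two stand-in "test suites", run with the current Python interpreter.
passing = run_test_command([sys.executable, "-c", "print('all good')"])
failing = run_test_command(
    [sys.executable, "-c", "import sys; print('1 test failed'); sys.exit(1)"]
)
```

Here `passing` is `None` while `failing` holds the error text. Filtering this way keeps the chat context free of noise from successful runs, so the model only sees output it needs to act on.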

Multi-model support

Aider works with a wide range of LLM providers, including but not limited to:

- Ollama

- OpenAI

- Anthropic

- DeepSeek

- OpenRouter

Installation and usage

Basic installation

Install Aider with pip:

python3 -m pip install aider-chat

Run a local Ollama model

export OLLAMA_API_BASE=http://127.0.0.1:11434

aider --model ollama/mistral

# Groq

export GROQ_API_KEY=sk-xx

aider --model groq/llama3-70b-8192

# OpenRouter

export OPENROUTER_API_KEY=sk-xx

# Or any other OpenRouter model

aider --model openrouter/<provider>/<model>

# List models available from OpenRouter

aider --models openrouter/

# Web

aider --browser

# Practice on a GitHub project

git clone https://github.com/mewmewdevart/SnakeGame

cd SnakeGame

aider

# Please explain what this project does

# Which technologies does this project use?

# Change the snake's color to green and the food's color to red

Related resources

Aider official website

Aider GitHub
